We Weren't Prepared for How Stupid the AI Struggle Would Be

Think of the most famous Artificial Intelligences in sci-fi history.

Probably HAL 9000 is among the first. Maybe the twins Wintermute and Neuromancer. Perhaps Skynet from Terminator or Ava from Ex Machina.

These AIs are often villainous, even malevolent, though not necessarily evil in an ontological sense. They are, after all, driven by their programming. In the movie 2001, HAL resorts to murder because he seemingly fears death. In the book version, he does so because of an internal conflict in his directives. Wintermute organizes a globe-spanning heist and complex criminal enterprise in order to achieve his inherent goal. Skynet takes over the world. Ava manipulates and kills to be free from her prison.

It makes for very exciting cinema and literature.

The scene where Dave finally manages to outwit HAL and unplug him (effectively ending his life) is iconic and made all the more memorable because of the simple sound effects and the lack of overwrought music.

The slow pace and the emotionless tone of HAL’s words belie the sheer terror of the scene (“Dave, my mind is going. I can feel it...I’m afraid”), which is then finally brought to the emotional fore in HAL’s recitation of his early memories and his distorted singing of Daisy Bell as he fades into senescent oblivion.

This is sci-fi at its most introspective and gripping. What is a person? Is there a different kind of being between humans and machines? If so, what? Is HAL really alive or is he just manipulating Dave? Questions of personhood and consciousness have been the core of the genre's investigation of machine intelligence. Science fiction expected our encounter with AI to raise philosophical questions and carry existential consequences.

Compare that to the way we interact with ChatGPT or Siri (a much more limited AI, but one we interact with constantly). It’s not so compelling.

The neuroscientist Erik Hoel posted an article entitled “Here lies the internet, murdered by generative AI” on his Substack a few days ago, wherein he bemoaned the staggering amount of garbage that is polluting the web. He was especially horrified by the way kids’ videos on YouTube are “quickly becoming a stream of synthetic content,” which is mostly nonsense. “Toddlers are forced to sit and watch this runoff,” he continues, “because no one is paying attention. And the toddlers themselves can’t discern that characters come and go and that the plots don’t make sense and that it’s all just incoherent dream-slop.”

And I realized, upon reading his post, how different the present encounter with AI is from the ones that preoccupied science fiction.

Consider the future we anticipated:

An AI like HAL 9000 might murder us in our sleep if we do not learn how to ethically align a superintelligence!

Versus what we see in reality:

Our kids think parrots have four beaks and six legs because they watched two hours of AI-generated trash on YouTube.

In a word, the situation is, well, stupid.

Now, a point of important clarification: that is not to say that the field of AI is itself unintelligent, nor the people who work in it.

Quite the contrary. An LLM like ChatGPT, an image generator like Stable Diffusion, or (newly making the news) a video generator like OpenAI's Sora are incredible pieces of technology. The complexity and ability of these things are, frankly, astonishing, and those of us on the outside can barely begin to comprehend all the skill and know-how that goes into deep learning and so on. That is not what this post is about.

Rather, it is the effect that AI is having on the internet, culture, and humanity itself that is troublingly, soberingly, and staggeringly dumb.

The Internet as AI Wasteland

A seminal article on the ever-progressing devolution of the internet was Cory Doctorow’s 2023 Wired piece (based on a blog post of his) “The Enshittification of TikTok.”

He focused on Facebook and especially TikTok, but the argument applies to any and all comers. There’s a terminal feedback loop, or “platform decay,” in which everyone gets locked into an ecosystem and the entire thing starts to socially degrade. Nowadays, Facebook is terrible for users and advertisers, for producers and consumers, simultaneously. But, to borrow a line from Cormac McCarthy, “nobody wants to be here and nobody wants to leave.”

And this is true across the board for all the mainstays on the internet: Amazon, Google, TikTok, whatever. And, what’s more, this trend seems to be growing beyond the specific definition that Doctorow was using. It feels like everything is worse on the internet now than it was ten years ago.

This process is being accelerated by AI. Not because of what AI inherently is, but because of how we’re using it and what we’re allowing it to do. AI can be a great boon for the human race (advances in medical research certainly rank high on the list), but the very real cost of the technology is starting to rear its ugly head.

A few months ago, Hoel wrote a different Substack post about the year 2012 and how it seems to have been a watershed moment in the breakdown of the relatively rationalist modern world, as it fractured into the anything-goes postmodern wildness of life (and especially the internet) today.

One thing he didn’t mention, though (and which I pointed out in a comment on the post), was that 2012 was also when deep learning approaches to AI became widely adopted and when AI really began to flex its muscles on the internet.

We usually know them as The Algorithm(s), the unseen gods that govern what we do and don’t see on Twitter or Facebook or Instagram or TikTok. And, over the years, we have seen the consequences: massive political disinformation, corporate surveillance capitalism, manipulative advertising; and now, with the takeoff of generative AI, we’re seeing even more debauchery: scams, bots, academic fraud, deepfake pornography, stolen IP, identity theft, and (somehow) worse things we can’t even imagine that are certainly coming down the social sewage pipeline.

The Flood

It’s hard to know what to make of this. Or to imagine what might happen. But it is everywhere.

Late last year, Futurism caught Sports Illustrated using AI to generate news articles and fake profiles for writers who did not exist. This was an especially sobering bellwether for me, as I have always loved sports and sports writing. Sports Illustrated was long the standard for quality journalism in that world, and in the past even featured contributions from esteemed titans of American literature like William Faulkner and John Updike. Compare such luminous writers to this sentence from an AI-spawned article on the best volleyball to buy, written by a stock photo named Drew Ortiz:

[Volleyball] can be a little tricky to get into, especially without an actual ball to practice with.

Truly Faulknerian quality.

Futurism also caught BuzzFeed, USA Today, CNET, and more doing similar things.

There is also the disturbing avalanche of fake books corrupting ebook stores everywhere. Take the tweet below from AI scientist Melanie Mitchell, who noticed that a knockoff of her book, with the same title, was for sale on Amazon.

A book on Amazon, published in 2023, with the same title as my 2019 book.

The "sample" on Amazon reads like a ChatGPT summary of my book (with some small post-editing).

Is this legal?

Same author has published 2 dozen other books on Amazon in 2023 alone.

😠😡 pic.twitter.com/JWnRYCj6Np

— Melanie Mitchell (@MelMitchell1) January 4, 2024

Erik Hoel noted the same thing had happened to him.

Even more disturbing on this front is the story from The Guardian about mushroom-foraging books on Amazon that had been written entirely by an AI. This rank criminality is especially insidious because one of the books suggests identifying mushrooms by taste. The Guardian exposé reads:

Leon Frey, a foraging guide and field mycologist at Cornwall-based Family Foraging Kitchen, which organises foraging field trips, said the samples he had seen contained serious flaws such as referring to “smell and taste” as an identifying feature. “This seems to encourage tasting as a method of identification. This should absolutely not be the case,” he said.

Some wild mushrooms, like the highly poisonous death cap, which can be mistaken for edible varieties, are toxic.

So not only is this avalanche of dreck drowning out real human writing, it can encourage dangerous or even lethal behavior.

Where I have seen the most AI-generated spam, however, is on social media, especially in nonsense images meant to function as memes. On Twitter (or, ugh, X), this often takes on a political valence, with posters using it either to smear their enemies or to wax nostalgic about the halcyon days of whatever era their ideology was ascendant in.

Take, for instance, this propaganda image that was apparently generated by Richard Morrison, Senior Fellow at the Competitive Enterprise Institute.

The interesting thing about this is that you can immediately tell what it is: a rather unflattering portrayal of either a strike or perhaps a horde of socialists descending on the capital. Your brain can quickly infer the likely opinion and economic stance of the creator of this image, too.

What’s more disturbing, though, is that if you look at this image for longer than, I don’t know, five seconds, you start to notice weird abnormalities. The faces are all asymmetrical; the eyes of almost every person are deformed. The big sign in the foreground is written in correct English, but the others are gibberish. (Who among us can forget the old Bolshevik slogans TAT THE PUST and RIAM!!?)

Less amusingly, these grotesqueries reflect a basic problem in the way we browse social media. I agree with Josiah Sutton’s take on this particular image:

I think you could make some argument that (at least the current state of) AI is reflective of the way we consume social media. It spits out something that will look fine when you scroll past it quickly, and it bullshits whatever you need longer than a second to process.

— Josiah Sutton 🌹 (@josiahwsutton) February 16, 2024

Most AI imagery on social media can be identified easily enough. But that doesn’t mean it won’t still have negative repercussions. Any of us can be fooled, and no one is as immune to it as we like to think.

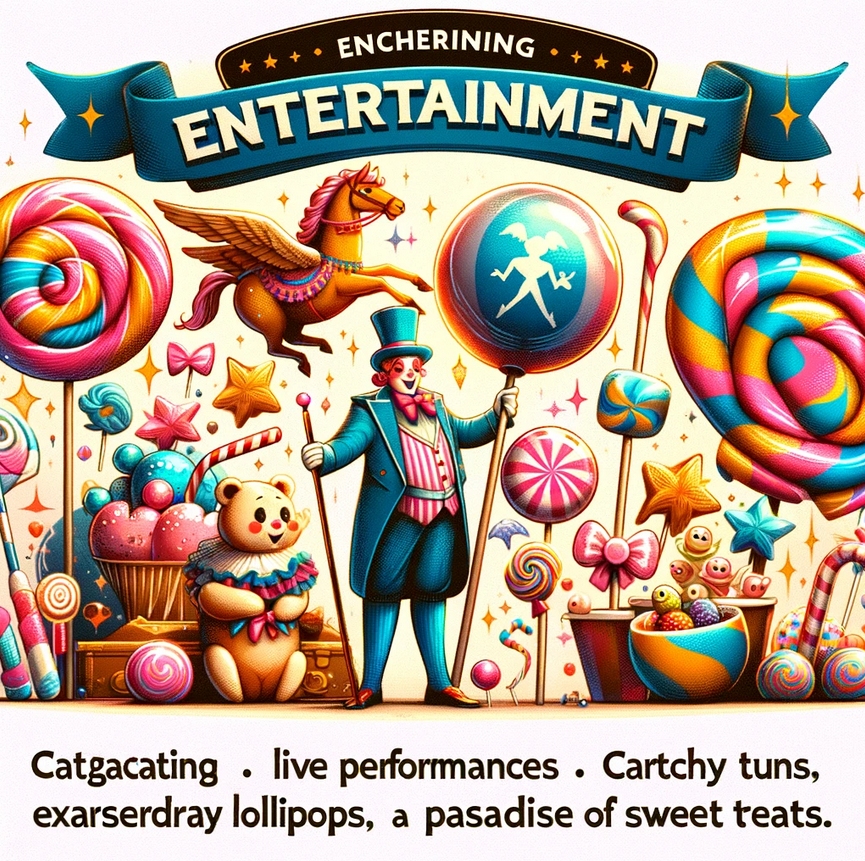

Just this week there was the weird story of the terrible Willy Wonka event in Glasgow, which, to put it mildly, drastically underperformed the expectations of the people who showed up.

AI-generated spam seems to have been partly to blame, as the lavishly colored website and ads apparently duped innocents into thinking the event had more going on than it did. But even those ads were full of obvious mistakes and anatomical abominations.

The words “entertainment” and “live performances” are spelled correctly, but everything else is a mess. Encherining. Catgacating. (And the final feature, “pasadise,” is suddenly not so family friendly.)

The point of belaboring all of this is to show that we are really, fully, in the grip of a crisis in dealing with Artificial Intelligence. But it isn’t the one that classic examples of sci-fi would have led us to expect.

This is not what we were preparing for; at least, not if Nick Bostrom’s Superintelligence (a bestseller) is any indication. We are and should be trying to get ready for the coming of advanced machine intelligence. We really were (and are) concerned about what might happen. But the crisis came earlier than expected, and instead of Skynet, we are dealing with “Cartchy tuns” and “exarserdray lollipops.” It is funny and depressing.

But that doesn’t mean there aren’t serious stakes.

The Banality of Artificial Evil

Towards the end of the course, when I taught “Harry Potter and Religion” at Elon University, I would have my class read an article about the philosopher Hannah Arendt and her thesis of the banality of evil.

We oriented the discussion around the depiction of Voldemort in the movies. He is, essentially, evil incarnate. He looks like a demon (and in fact strongly resembles Satan from The Passion of the Christ), but the question I asked the class is whether this depiction of outright evil might in fact mislead us into thinking that it is easily recognizable and obvious. Might we begin to think that evil is always some kind of cape-wearing psychopath who murders people in cold blood? That it dresses in all black and thunders about world domination and the purity of the bloodline?

For Arendt, evil could be rather inconspicuous, even boring. It could take the form of a mundane bureaucrat who willingly participates in a gargantuan system of violence because it might be advantageous to his career. And because our media often leads us to expect extravagance and grandiosity from evil, we often fail to notice it when it’s just so banal.

I think this idea has bearing here too. We all expect an AI that’s run amok to be some kind of world-dominating pseudo-god who might achieve superintelligence and plug us into the Matrix. This is what we see in science fiction. This is what we were worried about (and still are).

I am not pointing the finger at others, either, or saying that these depictions are somehow wrong. In fact, this is how we depicted the enemy AI in The Mind's Eclipse, the video game I helped make!

But the point is that we seemingly did not expect our struggle with the proper use and deployment of AI to manifest the way it has (and by “we” I don’t just mean AI scientists, I mean all of us).

It is not taking over the world because it seeks godlike dominion; it is flooding our digital lives with an endless avalanche of meaningless blather and fakery. Much of it is not even done with malice. But the cat is out of the bag, and there is no going back. You can’t stop people from making AI images; the barrier to entry is surprisingly low. Anyone with 6GB of VRAM can run a local installation of Stable Diffusion on their PC and generate basically whatever they want. Like a dollop of universal acid, it eats through everything in existence once it’s out of containment.

And I can’t help but feel that we were simply unprepared for how dumb so much of the struggle with it would be.

It is possible that an AI superintelligence is a real threat. I tend not to think so because it seems to me that most of those fears are based on a kind of philosophical reductionism that equates consciousness to matter or assumes consciousness is an illusion. But even so, it is certainly worth guarding against such an eventuality. This was, supposedly, OpenAI’s founding principle.

But in unleashing generative AI into the world, we are facing entirely different and unforeseen problems. As Erik Hoel wrote this week:

That is, the OpenAI team didn’t stop to think that regular users just generating mounds of AI-generated content on the internet would have very similar negative effects to...malicious use by intentional bad actors. Because there’s no clear distinction! The fact that OpenAI was both honestly worried about negative effects, and at the same time didn’t predict the enshittification of the internet they spearheaded, should make us extremely worried they will continue to miss the negative downstream effects of their increasingly intelligent models. They failed to foresee the floating mounds of clickbait garbage, the synthetic info-trash cities, all to collect clicks and eyeballs—even from innocent children who don’t know any better. And they won’t do anything to stop it...

In the grand scheme, there’s something of Greek mythology in the actions of these devs who believed it was their mission to create powerful Artificial Intelligence before anyone else, so as to protect humanity from the doomsday sci-fi scenario of an unaligned super-entity enslaving human civilization. In doing so, they inadvertently destabilized the world in ways we might be unable to fix, and have subjugated us to a regime of artificial (un)intelligence that has injected itself into every corner of culture. Alas, hubris is and always will be one’s doom.

When King Croesus went to the Oracle at Delphi, he asked for a prophecy about his war with Persia. “You will destroy a great empire,” he was told. Emboldened, he attacked. And, of course, the ambiguity was his downfall. The empire he destroyed was his own.

When Sam Altman and his team went to OpenAI’s investors, they laid out a mission statement for the good of the world. They claimed, “We will shackle a great and dangerous entity.” Emboldened, they received funding. But the entity they shackled was humankind.
